Planetary Long-Range Deep 2D Global Localization Using Generative Adversarial Network

© Korea Robotics Society. All rights reserved.

Abstract

Planetary global localization is necessary for long-range rover missions, in which communication with command-center operators is throttled by the long distance. A number of studies have addressed this problem by matching the rover's surroundings with global digital elevation maps (DEMs). Conventional matching methods, however, struggle with artifacts in DEM-rendered images and/or rover 2D images caused by low DEM resolution, illumination variations in rover images, and small terrain features. In this work, we train a CNN discriminator to match rover 2D images with DEM-rendered images using a conditional Generative Adversarial Network (cGAN) architecture. We then use this discriminator to search an uncertainty bound given by the visual odometry (VO) error bound to estimate the rover's optimal location and orientation. Using the Devon Island dataset, we demonstrate our network's ability to learn to translate rover images into DEM-simulated images and to match them. The experimental results show that our proposed approach achieves a mean average precision of about 74%.

Keywords:

Global Localization System, Conditional Generative Adversarial Network

1. Introduction

Autonomous navigation is a necessity for a planetary rover to traverse long distances. Although numerous localization techniques have been developed to assist autonomous rover navigation, such as visual odometry (VO) and wheel odometry, they often suffer from accumulating error due to the lack of an absolute reference. Filtering algorithms and bundle adjustment can effectively reduce this error growth, but they cannot eliminate it entirely. Thus, a long-range mission needs a global localization algorithm that estimates the location and orientation of the rover with respect to a global absolute reference, such as a planetary inertial frame, the Universal Transverse Mercator (UTM) frame, or a topocentric frame.

Many approaches address the problem of planetary global localization. Multi-frame Odometry-compensated Global Alignment (MOGA) [1] matches LIDAR data to a Digital Elevation Map (DEM). However, LIDAR is often too heavy and power-hungry to deploy on a rover. 3D stereo reconstruction, on the other hand, typically produces a reliable point cloud only up to about 40 m from the rover. With a DEM resolution of about 2 m per pixel, that range reaches only about 20 DEM pixels from the rover, so the accuracy of global localization by matching 3D features from a single frame is limited. To localize a rover accurately from a single frame, 2D image-based matching is needed, since mountains, craters, skylines, and other 2D features are largely not limited by distance.

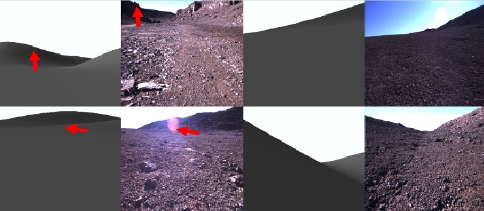

Stein et al. [2] match the skyline in a rover 2D image with the skyline rendered from a DEM to estimate the rover's global pose. Similarly, the Visual Position Estimator for Rovers (VIPER) [3,4] matches the skyline in a rover 2D image with that rendered from a DEM using local geometric shape features such as mountain peaks and their shapes. Wei et al. [5] address the same problem as VIPER, using a more robust skyline detection based on the major-line detection method [6] and matching the resulting strong skyline with a more robust Bayesian network. Both approaches, however, suffer from low DEM resolution as well as from artifacts in DEM-rendered images and/or rover 2D images caused by illumination variations and small terrain features, as shown in [fig. 1]. Tian et al. [7] show that deep CNNs outperform traditional approaches in matching local and global images under such variations; they use Faster R-CNN to match buildings in street-level images with geo-tagged oblique aerial photos for geolocalization in urban environments. Similarly, Lin et al. [8] use a CNN pretrained on ImageNet [11] and Places [12] to match Google Street View images with geo-tagged oblique aerial photos. Workman et al. [9], on the other hand, use a CNN pretrained on Places [12] to match street-level images with ortho-maps to estimate the optimal geolocation.

Fig. 1. Examples of artifacts that make it challenging to match rover images with DEM-rendered images. Top left and bottom right: rocks in the rover image that are too small to appear in the DEM. Bottom left and top right: illumination variations such as lens flare and limited dynamic range.

In this paper, we propose a novel method, Planetary Long-Range Deep 2D Global Localization Using Generative Adversarial Network. Our objective is to search a given space, defined by the VO error bound, for the rover's location. We divide the 6DOF pose space into 6D grid cells, each corresponding to a specific pose of the rover camera. For each cell, we simulate a virtual camera at the cell's pose and render a virtual 2D image from the DEM. Although the simulated image does not reflect local features too small to be resolved by the DEM, the general underlying terrain and skyline are mostly preserved. We match the simulated image of each cell with the true image captured by the rover camera, using an initial matching based on 2D major lines [6] extracted from both images. We thereby obtain a matching score for each cell and choose the optimal cell as the rover's 6D pose.
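The per-cell search described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: a pose is taken to be a (tx, ty, tz, rx, ry, rz) tuple, each axis of the VO error bound is split into equal-width bins whose centers serve as candidate camera poses, and `score` is a hypothetical stand-in for rendering the DEM at a pose and comparing the result with the rover image.

```python
from itertools import product
from typing import Callable, Iterable, Iterator, List, Sequence, Tuple

# Hypothetical pose layout: (tx, ty, tz, rx, ry, rz).
Pose = Tuple[float, ...]


def axis_centers(center: float, half_width: float, n: int) -> List[float]:
    """Centers of n equal-width bins covering [center - half_width, center + half_width]."""
    step = 2.0 * half_width / n
    return [center - half_width + (i + 0.5) * step for i in range(n)]


def pose_cells(center: Sequence[float], half_widths: Sequence[float],
               counts: Sequence[int]) -> Iterator[Pose]:
    """Return an iterator over the center pose of every cell in the 6D grid."""
    axes = [axis_centers(c, h, n) for c, h, n in zip(center, half_widths, counts)]
    return product(*axes)


def best_cell(cells: Iterable[Pose], score: Callable[[Pose], float]) -> Pose:
    """Return the cell with the highest matching score.

    In the method described above, score(cell) would compare the rover image
    with the DEM image rendered from `cell`; here it is any user-supplied
    function (hypothetical placeholder).
    """
    return max(cells, key=score)
```

The number of cells is the product of the per-axis counts, so the cost of the search grows multiplicatively with the resolution chosen on each axis; an axis can be held fixed by giving it a half-width of 0 and a count of 1.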

2. Long-Range Deep 2D Global Localization Network

Uncertainties in captured rover images, caused by local geometric shapes too small to appear in the DEM and by illumination variations, make matching difficult for conventional approaches. To overcome this challenge, we propose Long-Range Deep 2D Global Localization. We use a conditional generative adversarial network (cGAN) to generate a “fake” DEM-simulated image corresponding to a captured rover image. We then use this “fake” pair, together with the “true” pair of the rover image and its “true” DEM-simulated image, to train a CNN discriminator to distinguish fake pairs from true pairs, which effectively teaches it to match true correspondences.

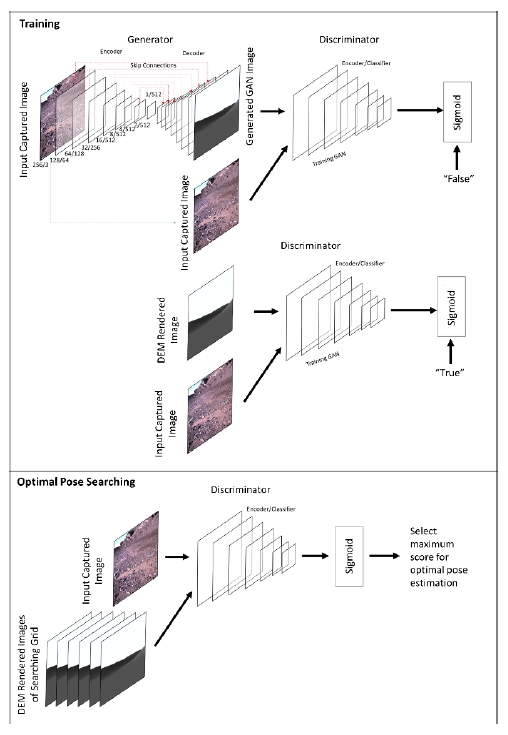

2.1 Generator

Similar to image-to-image translation [10], the generator (G) of the cGAN uses a “U-Net” to generate new images: a 15-layer encoder-decoder with skip connections between mirrored layers in the encoder and decoder stacks. The input image is scaled to 256x256x3, and the output image is 256x256. Each convolutional layer of the encoder uses a stride of 2 to halve the spatial resolution. The resolution and number of filters per layer are as follows: {128/64, 64/128, 32/256, 16/512, 8/512, 4/512, 2/512, 1/512}. The decoder mirrors the encoder, and each decoder layer also receives, by concatenation, the skip connection from the corresponding encoder layer. We used leaky ReLU activation for all layers and batch normalization for all but the first layer. The structure of the generator is shown in [fig. 2].
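The layer sizes listed above can be checked with a little shape arithmetic. The sketch below is illustrative only and assumes pix2pix-style settings that the text does not state: 4x4 kernels with stride 2 and padding 1 for both the encoder convolutions and the decoder transposed convolutions, and decoder layers whose filter counts mirror the encoder layer at the same resolution, so each concatenated skip connection doubles the channel count. The output layer's channel count is not given in the text, so only its spatial size is computed.

```python
from typing import List, Tuple

# Encoder filters per layer, from the text: 128/64, 64/128, ..., 1/512.
ENCODER_FILTERS = [64, 128, 256, 512, 512, 512, 512, 512]


def conv_out(size: int, kernel: int = 4, stride: int = 2, pad: int = 1) -> int:
    """Output size of a convolution (assumed 4x4 kernel, stride 2, padding 1)."""
    return (size + 2 * pad - kernel) // stride + 1


def deconv_out(size: int, kernel: int = 4, stride: int = 2, pad: int = 1) -> int:
    """Output size of a transposed convolution with the same assumed settings."""
    return (size - 1) * stride - 2 * pad + kernel


def unet_shapes(input_size: int = 256) -> Tuple[List[Tuple[int, int]], List[Tuple[int, int]], int]:
    """Return (encoder, decoder, output_size).

    encoder: (resolution, filters) after each encoder layer.
    decoder: (resolution, channels after concatenating the skip connection).
    """
    encoder: List[Tuple[int, int]] = []
    size = input_size
    for filters in ENCODER_FILTERS:
        size = conv_out(size)
        encoder.append((size, filters))

    decoder: List[Tuple[int, int]] = []
    # Walk back up from the 1x1 bottleneck, pairing each decoder layer with
    # the encoder layer of the same resolution (all but the bottleneck itself).
    for skip_size, skip_filters in reversed(encoder[:-1]):
        size = deconv_out(size)
        if size != skip_size:
            raise ValueError(f"resolution mismatch: {size} vs {skip_size}")
        # Assumed: decoder filters mirror the encoder, so concatenation doubles them.
        decoder.append((size, 2 * skip_filters))

    # Final upsampling back to the input resolution.
    return encoder, decoder, deconv_out(size)
```

Under these assumptions the encoder reproduces the listed schedule exactly, and the decoder returns from the 1x1 bottleneck to 256x256 through the same resolutions in reverse.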

2.2 Discriminator

The discriminator (D) uses a 7-layer CNN to discriminate real image pairs from fake ones. The two input images are concatenated, and each layer except the last halves the resolution; the last layer reduces the 4x4 map to a single 1x1 output. The resolution and number of filters per layer are as follows: {128/64, 64/128, 32/256, 16/512, 8/512, 4/512, 1/1}. Finally, a sigmoid unit estimates the matching probability between the paired input images. As in the generator, we used leaky ReLU activation for all layers and batch normalization for all but the first layer. The structure of the discriminator is shown in [fig. 2].
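The discriminator's schedule, in particular the last step from 4x4 to 1x1 that breaks the halving pattern, can be reproduced with the same kind of shape arithmetic. The sketch assumes 4x4 kernels with stride 2 and padding 1 for the first six layers and a 4x4 convolution with stride 1 and no padding for the last; none of these settings are stated in the text. It also includes a numerically stable logistic sigmoid that maps the final 1x1 output (a logit) to a matching probability.

```python
import math
from typing import List, Tuple

# Filters per layer, from the text: 128/64, 64/128, ..., 4/512, 1/1.
D_FILTERS = [64, 128, 256, 512, 512, 512, 1]


def conv_out(size: int, kernel: int, stride: int, pad: int) -> int:
    """Spatial output size of a convolution layer."""
    return (size + 2 * pad - kernel) // stride + 1


def discriminator_shapes(input_size: int = 256) -> List[Tuple[int, int]]:
    """(resolution, filters) after each discriminator layer."""
    shapes = []
    size = input_size
    for i, filters in enumerate(D_FILTERS):
        if i < len(D_FILTERS) - 1:
            size = conv_out(size, kernel=4, stride=2, pad=1)  # assumed: halves the size
        else:
            size = conv_out(size, kernel=4, stride=1, pad=0)  # assumed: 4x4 -> 1x1
        shapes.append((size, filters))
    return shapes


def match_probability(logit: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large |logit|."""
    if logit >= 0:
        return 1.0 / (1.0 + math.exp(-logit))
    z = math.exp(logit)
    return z / (1.0 + z)
```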

2.3 Training Procedure

Assume we have a dataset of paired samples (A, B), where A and B are rover and DEM-simulated images, respectively. For every mini-batch of paired samples, we use backpropagation to minimize the generator's log-likelihood function and maximize the discriminator's log-likelihood function.

Here, G(x, y) is the “fake” DEM image generated by the generator, and D(x, y) is the discriminator's output probability of matching x to y.
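Because the objective equations did not survive in this version of the text, the sketch below assumes the standard conditional GAN objective of [10], which the description above appears to follow: the discriminator maximizes log D(A, B) + log(1 - D(A, G(A))) and the generator minimizes log(1 - D(A, G(A))), both averaged over a mini-batch. Whether the authors add the L1 reconstruction term used in [10], or use its non-saturating generator loss (maximizing log D(A, G(A)) instead), cannot be recovered from the text. The functions take the discriminator's output probabilities on true and fake pairs; any noise input to G is omitted.

```python
import math
from typing import Sequence

EPS = 1e-12  # keeps log() finite when a probability is exactly 0 or 1


def _mean_log(values: Sequence[float]) -> float:
    """Mean of log(max(v, EPS)) over a mini-batch."""
    return sum(math.log(max(v, EPS)) for v in values) / len(values)


def discriminator_log_likelihood(d_true: Sequence[float], d_fake: Sequence[float]) -> float:
    """Mini-batch estimate of E[log D(A, B)] + E[log(1 - D(A, G(A)))].

    d_true: D's probabilities on true pairs (A, B).
    d_fake: D's probabilities on fake pairs (A, G(A)).
    The discriminator is updated to increase this value.
    """
    return _mean_log(d_true) + _mean_log([1.0 - p for p in d_fake])


def generator_log_likelihood(d_fake: Sequence[float]) -> float:
    """Mini-batch estimate of E[log(1 - D(A, G(A)))]; the generator is updated to decrease it."""
    return _mean_log([1.0 - p for p in d_fake])
```

In a framework with automatic differentiation these quantities would be computed on tensors and differentiated; the plain-float version only shows which terms each network pushes up or down.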

3. Experimental Results

We used bundle 1 of the Devon Island dataset [13], which includes 2056 images with ground-truth locations. For each image, we used an OpenGL-based renderer to generate a DEM-simulated image at the ground-truth location. We divided the set into 80% training and 20% testing samples and trained the cGAN for 80 epochs on the training samples.
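For concreteness, an 80%/20% split of the 2056 images yields 1644 training and 412 testing images when the training count is rounded down (0.8 x 2056 = 1644.8). The sketch below assumes a seeded random per-image split; the text does not say how images were assigned to the two sets.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(items: Sequence[T], train_fraction: float = 0.8,
                  seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle with a fixed seed, then cut into training and testing lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = int(train_fraction * len(shuffled))  # rounds down
    return shuffled[:n_train], shuffled[n_train:]


train, test = split_dataset(range(2056))
print(len(train), len(test))  # 1644 412
```

Because the dataset's images are consecutive frames along a rover traverse, neighboring frames tend to look alike; a random split places such near-duplicates in both sets, whereas a contiguous split along the traverse would be a stricter test.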

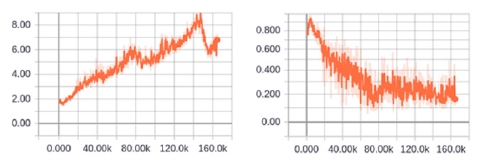

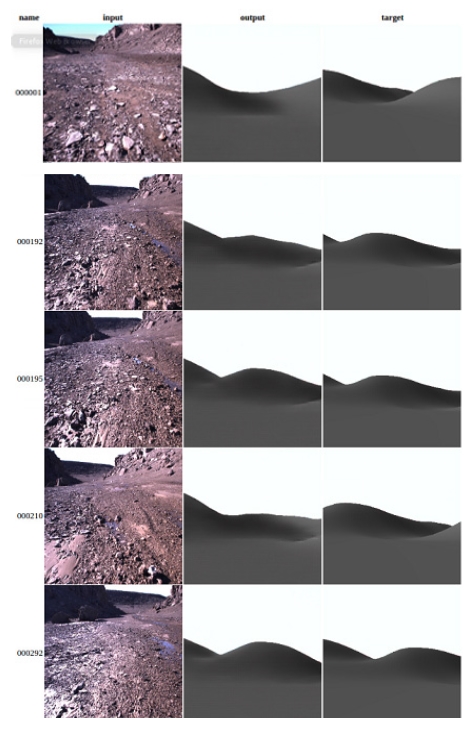

The evolution of the log-likelihood functions can be seen in [fig. 3], and examples of the generator output can be seen in [fig. 4].

Fig. 3. Evolution of the loss functions (negative log-likelihood) over training iterations. Left: discriminator loss. Right: generator loss.

Fig. 4. Examples of input rover images (left), the generator's “fake” DEM-simulated images (middle), and the actual “true” DEM-simulated images (right).

We then assumed a VO error bound of 100 m around each ground-truth location and divided it into 100x100x180 grid cells along Tx, Ty, and Rz. For each cell, we rendered a DEM-simulated image. We used the discriminator to evaluate each cell and selected the best match as the optimal estimate of the rover's global location. [Table 1] shows the accuracy of the proposed algorithm as well as the L1 loss between the estimated location and the ground truth.
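The search over the Tx, Ty, Rz grid can be sketched as below. With the stated 100x100x180 grid, each query requires 100 x 100 x 180 = 1,800,000 rendered images and discriminator evaluations. The sketch assumes that the 100 m bound is the full side length of a square centered on the ground truth (giving 1 m translation cells) and that Rz spans a full 360° (giving 2° yaw cells); the text states neither. `score` is a hypothetical placeholder for evaluating the discriminator on the rover image and the DEM image rendered at a cell, and the L1 error is taken here as |dx| + |dy| in meters.

```python
from itertools import product
from typing import Callable, List, Tuple

Cell = Tuple[float, float, float]  # (tx [m], ty [m], rz [deg])


def axis(center: float, span: float, n: int) -> List[float]:
    """Centers of n equal cells covering [center - span/2, center + span/2]."""
    step = span / n
    return [center - span / 2 + (i + 0.5) * step for i in range(n)]


def localize(gt_xy: Tuple[float, float], score: Callable[[Cell], float],
             span_m: float = 100.0, n_xy: int = 100, n_yaw: int = 180) -> Cell:
    """Return the grid cell with the highest (hypothetical) discriminator score."""
    xs = axis(gt_xy[0], span_m, n_xy)
    ys = axis(gt_xy[1], span_m, n_xy)
    yaws = [k * 360.0 / n_yaw for k in range(n_yaw)]  # assumed full-circle yaw
    return max(product(xs, ys, yaws), key=score)


def l1_error(estimate: Cell, gt_xy: Tuple[float, float]) -> float:
    """|dx| + |dy| between the estimated and ground-truth positions, in meters."""
    return abs(estimate[0] - gt_xy[0]) + abs(estimate[1] - gt_xy[1])
```

One consequence of these assumptions: with an even number of cells per axis, no cell center falls exactly on the ground truth, so even a perfect matcher would report an L1 error of 1 m (0.5 m on each axis).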

4. Conclusion

In this paper, we proposed a novel algorithm for the global localization of a planetary rover. We used a cGAN to train a CNN discriminator that extracts deep representations of rover 2D images and DEM-rendered images and uses them to estimate a matching probability. To overcome challenging artifacts in rover images and/or DEM-rendered images, we used a generator to produce “fake” DEM-simulated images that help train the discriminator further. This approach alleviates the need for a massive training dataset, which may not be attainable in interplanetary environments. We divide the pose search space defined by the VO error bound into a grid and find the rover's optimal pose by evaluating the discriminator at each cell, using the rover image and the DEM image rendered from that cell's viewpoint. Experimental results demonstrate the training performance, generated samples, and accuracy of our proposed approach.

Acknowledgments

This research was supported in part by the “Space Initiative Program” of the National Research Foundation (NRF) of Korea (NRF-2013M1A3A3A02042335), sponsored by the Korean Ministry of Science, ICT and Planning (MSIP); in part by the “3D Visual Recognition Project” of the Korea Evaluation Institute of Industrial Technology (KEIT) (2015-10060160); in part by the “Robot Industry Fusion Core Technology Development Project” of KEIT (R0004590); and in part by the KIAT under the Robot Industry Fusion Core Technology Development Project (R0004590) supervised by KEIT. This research was also supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF), funded by the Ministry of Education (2017R1A6A3A11036554).

References

- [1] P.J.F. Carle, P.T. Furgale, T.D. Barfoot, “Long-range rover localization by matching LIDAR scans to orbital elevation maps,” J. Field Robot., vol. 27, no. 3, pp. 344-370, 2010.

- [2] F. Stein, G. Medioni, “Map-based localization using the panoramic horizon,” IEEE Trans. Robot. Autom., vol. 11, no. 6, pp. 892-896, 1995. https://doi.org/10.1109/70.478436

- [3] F. Cozman, E. Krotkov, “Automatic mountain detection and pose estimation for teleoperation of lunar rovers,” International Conference on Robotics and Automation, Albuquerque, NM, USA, pp. 2452-2457, 1997. https://doi.org/10.1109/robot.1997.619329

- [4] F. Cozman, E. Krotkov, C. Guestrin, “Outdoor visual position estimation for planetary rovers,” Auton. Robots, vol. 9, no. 2, pp. 135-150, 2000.

- [5] L. Wei, S. Lee, “3D peak based long range rover localization,” 2016 7th International Conference on Mechanical and Aerospace Engineering, London, UK, 2016. https://doi.org/10.1109/icmae.2016.7549610

- [6] J. Kim, S. Lee, “Extracting major lines by recruiting zero-threshold canny edge links along sobel highlights,” IEEE Signal Process. Lett., vol. 22, no. 10, pp. 1689-1692, 2015. https://doi.org/10.1109/lsp.2015.2400211

- [7] Y. Tian, C. Chen, M. Shah, “Cross-View Image Matching for Geo-localization in Urban Environments,” 2017. https://doi.org/10.1109/cvpr.2017.216

- [8] T-Y. Lin, Y. Cui, S. Belongie, J. Hays, “Learning deep representations for ground-to-aerial geolocalization,” 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, pp. 5007-5015, 2015. https://doi.org/10.1109/cvpr.2015.7299135

- [9] S. Workman, R. Souvenir, N. Jacobs, “Wide-area image geolocalization with aerial reference imagery,” Proceedings of the IEEE International Conference on Computer Vision, 2015. https://doi.org/10.1109/iccv.2015.451

- [10] P. Isola, J-Y. Zhu, T. Zhou, A.A. Efros, “Image-to-Image Translation with Conditional Adversarial Networks,” 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, pp. 5967-5976, 2017. https://doi.org/10.1109/cvpr.2017.632

- [11] O. Russakovsky, J. Deng, H. Su, J. Krause, S. Satheesh, S. Ma, Z. Huang, A. Karpathy, A. Khosla, M. Bernstein, A.C. Berg, L. Fei-Fei, “ImageNet large scale visual recognition challenge,” Int. J. Comput. Vis., vol. 115, no. 3, pp. 211-252, 2015. https://doi.org/10.1007/s11263-015-0816-y

- [12] B. Zhou, A. Lapedriza, J. Xiao, A. Torralba, A. Oliva, “Learning deep features for scene recognition using places database,” Adv. Neural Inf. Process. Syst., vol. 27, pp. 487-495, 2014.

- [13] P. Furgale, P. Carle, J. Enright, T.D. Barfoot, “The Devon Island rover navigation dataset,” Int. J. Robot. Res., vol. 31, no. 6, pp. 707-713, 2012. https://doi.org/10.1177/0278364911433135

2005 Electronics & Electrical Communication Engineering, Cairo University, Egypt (BSc.)

2014 Information and Communication Engineering Sungkyunkwan University (MSc.)

Area of Interest: Visual Recognition and Machine Learning

2016 Information technology, PTIT, Vietnam (BSc.)

Area of Interest: Computer Vision, Deep Learning, Artificial Intelligence

2008 Electrical and Electronics Engineering, UET, Pakistan (BSc.)

From 2013 Information and Communication Engineering Sungkyunkwan University (PhD. Candidate)

Area of Interest: Machine Learning and Artificial Intelligence

2002 Information and Communication Engineering Sungkyunkwan University (BSc.)

2007 Information and Communication Engineering Sungkyunkwan University (MSc.)

2015 Information and Communication Engineering Sungkyunkwan University (PhD. in Computer Science Engineering)

Area of Interest: Visual Recognition, Pattern Recognition, Image Processing, and Machine Learning